A longstanding question in vision research is how we perceive a 3D world from the 2D images projected onto our retinas. My research focuses on 3D vision and its role in guiding actions. We employ motion tracking and eye-tracking technology to conduct experiments in both virtual reality and real-world environments.

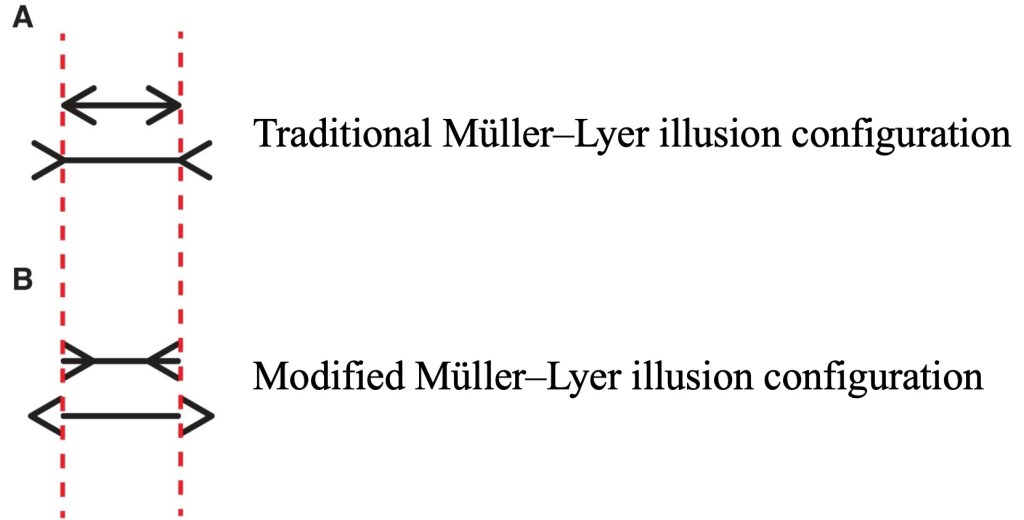

Eye movement in Müller–Lyer Illusion

Abstract: This study examined the relationship between perception and oculomotor control in response to visual illusions. While both perception and saccades were influenced by the Müller–Lyer illusion, a modified setup with a weaker perceptual illusion did not produce a comparable reduction in saccadic bias. Instead, the saccadic effect may partially result from the center-of-gravity (CoG) effect rather than solely from illusory perception. Additionally, the lack of trial-by-trial correlation between perceptual and saccadic effects suggests largely independent sources of noise for perception and oculomotor control.

For more detail, please see https://journals.physiology.org/doi/full/10.1152/jn.00166.2020

3D Slant perception in stereo-blind subjects

Research question: This project investigated how the long-term experience of stereoblindness influences individuals’ 3D slant perception.

3D slant perception: The visual system’s ability to estimate the orientation of a surface relative to the observer’s line of sight in three-dimensional space.

Stereo vision: The computation of depth based on the binocular disparity between the images of an object in the left and right eyes.

Stereo-blind: Unable to perceive depth through stereoscopic cues, as is the case for 4–14% of the population.

Abstract: Stereopsis is an important depth cue for people with typical vision, but a subset of people are stereoblind and cannot use binocular disparity as a cue to depth. Does this experience of stereoblindness modulate the use of other depth cues? We investigated this question by comparing perception of 3D slant from texture for stereoblind people and stereo-normal people. Subjects performed slant discrimination and slant estimation tasks using both monocular and binocular stimuli. We found that the two groups had comparable ability to discriminate slant from texture information and showed similar mappings between texture information and slant perception (perception was biased toward a frontal surface when texture information indicated low slants). The results suggest that the experience of stereoblindness did not change the use of texture information for slant perception. In addition, we found that stereoblind people benefitted from binocular viewing in the slant estimation task, despite their inability to use binocular disparity information. These findings are generally consistent with the optimal cue combination model of slant perception.

For more detail, please see https://jov.arvojournals.org/article.aspx?articleid=2778741

Reaching-to-grasp in stereo-blind subjects

Research question: This project investigated how the long-term experience of stereoblindness influences individuals’ visuomotor control.

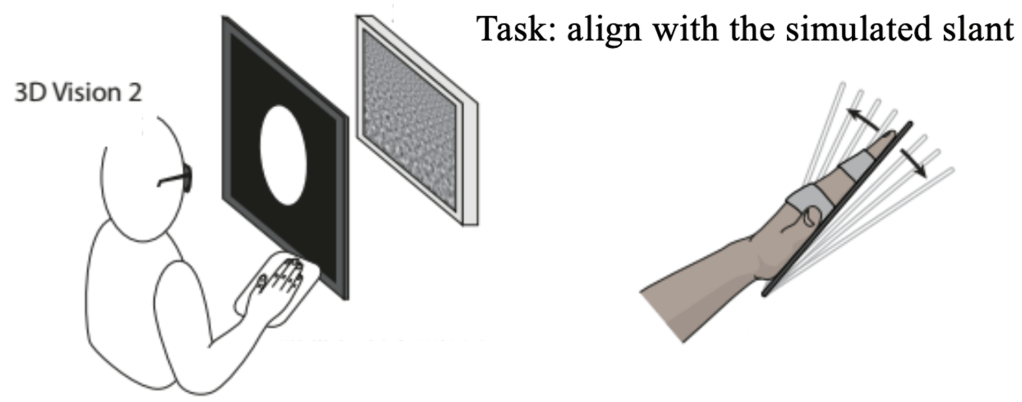

The main task in the current study

Abstract: Reaching to grasp objects is a fundamental human action that relies heavily on stereoscopic vision. However, it remains unclear how reach-to-grasp performance is affected by the long-term absence of stereoscopic information, and what consequences come with that absence. To address these issues, we recruited 12 stereo-blind and 12 typically developed individuals and compared their performance in slant-matching and reach-to-grasp tasks. The slant-matching task revealed no significant differences between the two groups in slant perception, consistent with previous research (Yang et al., 2022). In contrast, the grasping task revealed that the stereoblind group approached the target more quickly and initiated grip alignment later, suggesting a greater reliance on online visuomotor feedback in grasping objects compared with the stereo-normal group. These findings suggest that in the absence of stereoscopic vision, the brain adapts by shifting the balance between feedforward and feedback mechanisms. This compensation highlights the plasticity of the visuomotor system, which reorganizes to optimize performance under sensory constraints. Understanding these adaptations provides insight into the neural strategies employed in visuomotor tasks when binocular disparity cues are unavailable, with implications for rehabilitation approaches in individuals with visual impairments.

The manuscript has been accepted for publication in IOVS. For more detail, please see https://doi.org/10.31219/osf.io/7tfdn_v5

Cue combination in slant perception

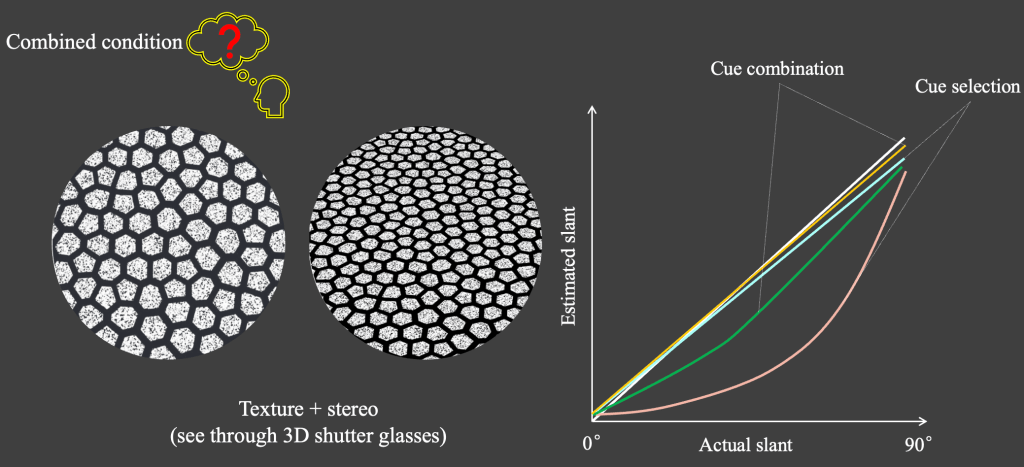

Research question: This project investigated whether and how different cues are integrated to form 3D slant perception.

The reliability of the texture cue is a function of the degree of slant.

One important cue for slant perception is texture, where changes in texture density, shape, or orientation provide information about surface slant. However, research has shown that the relationship between actual slant and perceived slant is nonlinear, meaning that our perception does not always match the physical reality in a straightforward way.

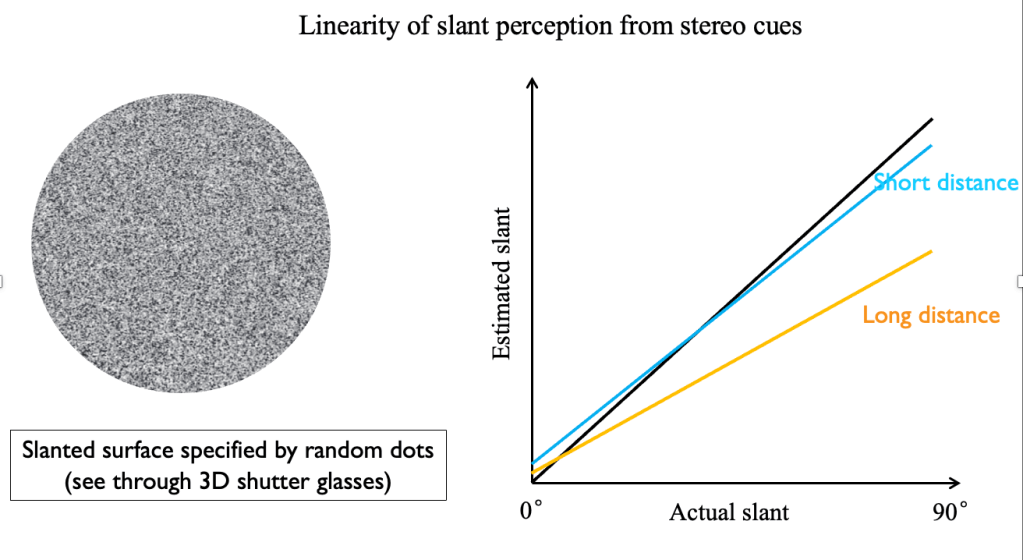

The reliability of the stereo cue is a function of the viewing distance.

Slant perception from stereo cues (binocular disparity) refers to how we use the differences between the images seen by our left and right eyes to estimate surface slant. Unlike slant perception from texture cues, which often exhibits nonlinear distortions, slant perception from stereo cues tends to be more linear, meaning that perceived slant follows actual slant in a nearly proportional manner.
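To illustrate why stereo reliability depends on viewing distance, here is a minimal geometric sketch. It is not taken from any of the studies on this page: the function name, the 6.3 cm interocular distance, and the 1 cm depth interval are assumptions chosen for illustration. It computes the relative disparity between two points on the midline and shows that doubling the viewing distance shrinks the disparity signal to roughly a quarter.

```python
import math

def relative_disparity_arcmin(distance_cm, depth_interval_cm, interocular_cm=6.3):
    """Relative disparity (arcmin) between two midline points separated in depth.

    interocular_cm is an assumed, typical adult value, not a measured one.
    """
    # Vergence angle subtended by the two eyes at the nearer and farther point.
    near = 2 * math.atan(interocular_cm / (2 * distance_cm))
    far = 2 * math.atan(interocular_cm / (2 * (distance_cm + depth_interval_cm)))
    return math.degrees(near - far) * 60

# Same 1 cm depth interval viewed from two illustrative distances.
disparity_near = relative_disparity_arcmin(90, 1.0)
disparity_far = relative_disparity_arcmin(180, 1.0)
ratio = disparity_near / disparity_far
print(f"{disparity_near:.2f} arcmin at 90 cm, {disparity_far:.2f} arcmin at 180 cm, ratio {ratio:.2f}")
```

Because disparity falls off approximately with the square of distance, the same physical depth difference produces a much weaker signal at the longer distance, which is one reason the stereo cue becomes less reliable there.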

How slant is estimated in the combined-cue condition is still debated.

Abstract: Numerous studies have shown that perceived 3D slant is biased, especially when depth information is limited, yet the underlying mechanism remains debated. An Ideal Observer explanation proposes that visual cues are combined with a prior weighted toward a default (frontal) slant, leading to biases when cues are less reliable. To distinguish this theory from explanations that focus on the nonlinear mapping between simulated slant and the optical information that people use to perceive slant, we investigated how biases in slant perception vary when the reliability of stereo information is changed. Subjects viewed images of slanted planar surfaces with informative (Voronoi) or uninformative (broadband noise) surface texture and estimated slant by aligning their palms. We manipulated the viewing distance (90 vs. 180 cm) to alter stereo cue reliability. Subjects also performed 2AFC slant discrimination to directly measure cue reliability. As expected, the reliability of stereo cues decreased with longer viewing distance, and the reliability of texture cues decreased with decreasing simulated slant. Notably, biases in slant estimation in the presence of stereo cues varied as a function of viewing distance. At the near viewing distance, slant estimates remained close to veridical, irrespective of the availability of texture cues. However, at the longer viewing distance, slant estimates exhibited reduced perceptual gains and a nonlinear response pattern when presented with Voronoi texture. Such effects were not predicted by models based on the nonlinear mapping between optical information and simulated slant, but were predicted by the Ideal Observer explanation, which proposes that slant perception results from combining cue integration with a frontal prior. We also found that cue reliability measured from the discrimination task was an effective predictor of the perceptual gain measured in the estimation task, regardless of condition. All these findings consistently support the Ideal Observer explanation of how humans perceive slant with multiple cues.
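The logic of an Ideal Observer with a frontal prior can be sketched with textbook Gaussian cue combination. This is only an illustration, not the model fitted in the manuscript: the function name and all numerical values (cue noise levels, prior width, the 40 degree slant) are hypothetical. Each cue and the prior are weighted by reliability (inverse variance), so the perceptual gain drops when a cue becomes noisier.

```python
def combine_with_prior(cue_estimates, cue_sigmas, prior_mean=0.0, prior_sigma=20.0):
    """Reliability-weighted combination of Gaussian cues with a Gaussian prior.

    Returns the posterior mean and the perceptual gain (total weight on the cues).
    prior_mean=0 stands for a frontal surface; prior_sigma is an assumed value.
    """
    weights = [1.0 / s**2 for s in cue_sigmas]   # reliability = 1 / variance
    prior_weight = 1.0 / prior_sigma**2
    total = sum(weights) + prior_weight
    mean = (sum(w * e for w, e in zip(weights, cue_estimates))
            + prior_weight * prior_mean) / total
    gain = sum(weights) / total
    return mean, gain

true_slant = 40.0  # degrees, hypothetical
# Cue order: [stereo, texture]; stereo is assumed noisier at the far distance.
mean_near, gain_near = combine_with_prior([true_slant, true_slant], [5.0, 10.0])
mean_far, gain_far = combine_with_prior([true_slant, true_slant], [15.0, 10.0])
print(f"near: estimate {mean_near:.1f} deg, gain {gain_near:.2f}")
print(f"far:  estimate {mean_far:.1f} deg, gain {gain_far:.2f}")
```

Under these assumptions the estimate is pulled further toward frontal at the far distance, which is the qualitative pattern the abstract describes as reduced perceptual gain.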

The manuscript is under review. For more detail, please see https://osf.io/6vxdy and https://jov.arvojournals.org/article.aspx?articleid=2770957. The preprint is available: https://osf.io/preprints/osf/zncx6_v1

Optical texture used in visually guided reaching

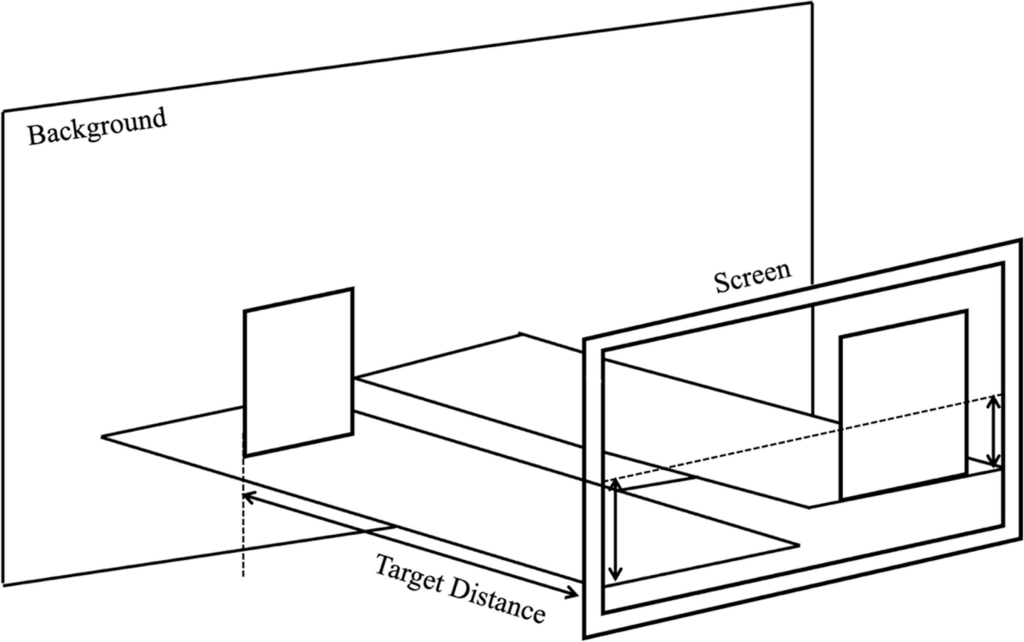

Viewing geometry

The task in the current study

Abstract: Reaches guided using monocular versus binocular vision have been found to be equally fast and accurate only when optical texture was available projected from a support surface across which the reach was performed. We now investigate what property of optical texture elements is used to perceive relative distance: image width, image height, or image shape. Participants performed reaches to match target distances. Targets appeared on a textured surface on the left and participants reached to place their hand at target distance along a surface on the right. A perturbation discriminated which texture property was being used. The righthand surface was higher than the lefthand one by either 2, 4 or 6 cm. Participants should overshoot if they matched texture image width at the target, undershoot if they matched image shape, and undershoot far distances and, depending on the overall eye height, overshoot near distances if they matched image height. In Experiment 1, participants reached by moving a joystick to control a hand avatar in a virtual environment display. Their eye height was 15 cm. For each texture property, distances were predicted from the viewing geometry. Results ruled out image width in favor of image height or shape. In Experiment 2, participants at a 50 cm eye height reached in an actual environment with the same manipulations. Results supported use of image shape (or foreshortening), consistent with findings of texture properties used in slant perception. We discuss implications for models of visually guided reaching.
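The direction of the predicted errors can be reproduced with a simplified small-angle sketch. This is my own illustrative approximation, not the viewing geometry used in the paper: it assumes identical texture elements on both surfaces, an eye directly above the near edge of the surface, and the approximations image width ∝ 1/r, image height ∝ H/r², and image shape (height/width) ∝ H/r, where H is eye height above the surface and r is the eye-to-element distance. The function name and the 30 and 70 cm target distances are hypothetical.

```python
import math

def matched_distance(target_cm, eye_height_cm, raise_cm, prop):
    """Distance on the raised surface at which a texture property matches the target.

    Small-angle approximation; raising the reach surface by raise_cm lowers the
    effective eye height above that surface to eye_height_cm - raise_cm.
    """
    D, H = target_cm, eye_height_cm
    Hr = H - raise_cm
    if prop == "width":    # match 1 / r
        return math.sqrt(D**2 + H**2 - Hr**2)
    if prop == "shape":    # match H / r
        return D * Hr / H
    if prop == "height":   # match H / r^2
        return math.sqrt(Hr * (D**2 + H**2) / H - Hr**2)
    raise ValueError(f"unknown texture property: {prop}")

# Illustrative values: 50 cm eye height, right-hand surface raised by 4 cm.
for target in (30.0, 70.0):
    predictions = {p: matched_distance(target, 50.0, 4.0, p)
                   for p in ("width", "height", "shape")}
    print(target, {p: round(d, 1) for p, d in predictions.items()})
```

In this sketch, matching width always overshoots, matching shape always undershoots, and matching height overshoots near targets but undershoots far ones, with the crossover depending on eye height.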

For more detail, please see https://www.sciencedirect.com/science/article/pii/S0042698922000359

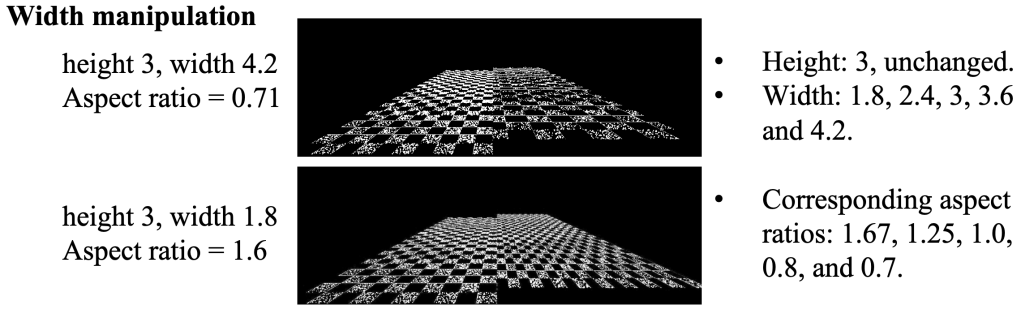

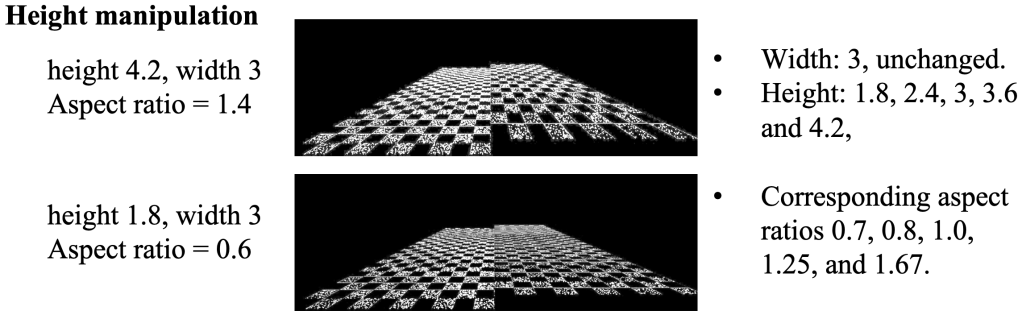

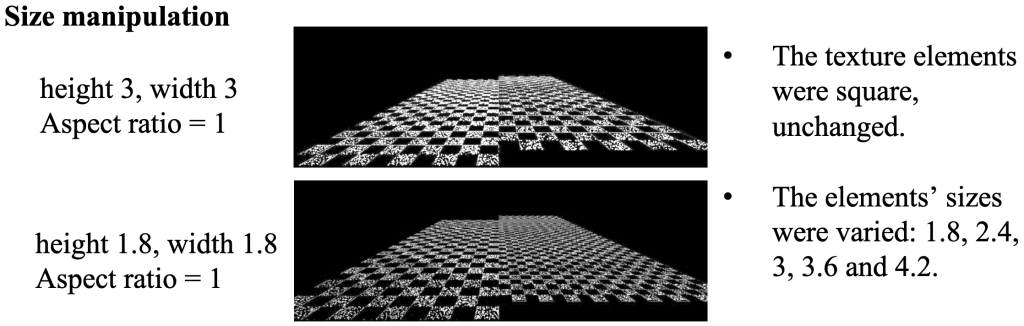

Texture properties used to perceive relative distance

Abstract: Bingham et al. (2022) investigated which property of the texture on a support surface is used to perceive relative distance and found it was the texture image shape. This study further investigated the use of image shape by manipulating texture image size and the aspect ratio of optical texture elements. We found that variations in the size of the texture elements influenced relative distance perception. However, when aspect ratio was manipulated, changes in image width had no significant effect, whereas changes in image height did, which is consistent with Chen & Saunders (2020), who showed that compression of optical texture is used in slant perception. Reconsidering Bingham et al. (2022), we concluded that the relative compression of optical texture (image height) is used as monocular information about relative distance.

Task employed in the current experiment

The manuscript is in preparation. For more detail, please see https://jov.arvojournals.org/article.aspx?articleid=2801053

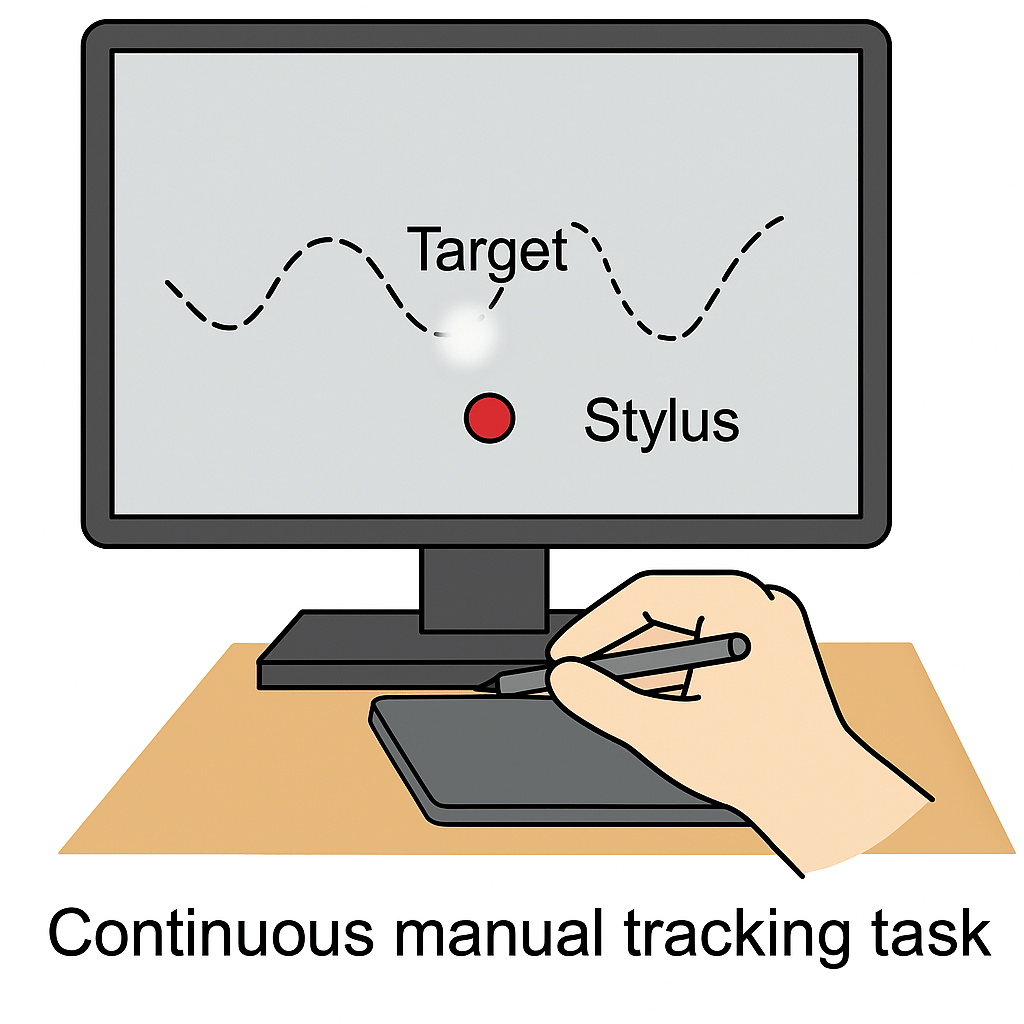

Predictability of trajectory modulates manual tracking

Research question: There is broad consensus that the human sensorimotor system is highly adaptable, but the mechanisms underlying such adaptation remain the subject of ongoing debate. While dominant theories emphasize internal models that predict sensory outcomes by integrating available input, ecological approaches argue for adaptation simply through direct perception and prospective information. Situated within this theoretical context, this study examined how individuals systematically adapt to trajectories of target movements at different levels of predictability in a manual tracking task, with the aim of elucidating the mechanisms by which information redundancy is utilized in sensorimotor control. In doing so, the study seeks to identify a balanced solution that integrates components of both predictive internal models and real-time information to interpret human visuomotor control.

Abstract: Humans show a remarkable capacity to adapt to varying external environments. However, investigations into the mechanisms that enable such adaptation raise several fundamental questions in the domain of sensorimotor control, most notably whether and how we employ internal representations to facilitate more efficient feedforward control. The current study addressed this question by examining how trajectory predictability modulates manual tracking in human participants. A somewhat surprising finding was that predictability produced no significant improvement in tracking performance, regardless of whether people were aware of it. However, analyses using a Kalman filter model revealed that participants did adapt their visuomotor processes by reducing their reliance on real-time visual input when tracking more predictable trajectories. These results suggest that, despite seemingly comparable tracking performance, participants increasingly relied on internal control mechanisms rather than real-time inputs when confronted with higher trajectory predictability. This study provides new insights into human sensorimotor control, highlighting the adaptive use of information redundancy in the environment and the balance between feedforward and feedback mechanisms in humans.

Task employed in the current experiment

We analyzed stimulus–response lag and tracking error—quantified as the root-mean-square error (RMSE) of the response—to assess tracking performance in different conditions. To further investigate the contributions of internal models and real-time sensory input, we fit a Kalman filter model to the data. From the model, we extracted the Kalman gain (K), sensory noise (Rs), and motor noise (Rr) to quantify how participants integrated prediction and sensory feedback during tracking.
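As a rough illustration of these measures, the sketch below computes RMSE and the steady-state gain of a scalar Kalman filter. It is not the model fitted to the data: it assumes a random-walk target, and the names `process_var` and `sensory_var` and their values are hypothetical stand-ins rather than the Rs and Rr parameters above. The point is only that a more predictable target (smaller process variance) yields a lower Kalman gain, meaning less weight on each new visual sample.

```python
import math

def rmse(target, response):
    """Root-mean-square error between target and response positions."""
    return math.sqrt(sum((t - r) ** 2 for t, r in zip(target, response)) / len(target))

def steady_state_gain(process_var, sensory_var, n_iter=200):
    """Iterate the scalar Kalman recursion until the gain settles.

    Assumes a random-walk target: x[t] = x[t-1] + w, observed as y[t] = x[t] + v,
    with var(w) = process_var and var(v) = sensory_var.
    """
    p = 1.0   # initial posterior variance (arbitrary starting value)
    k = 0.0
    for _ in range(n_iter):
        p_pred = p + process_var             # predict: uncertainty grows
        k = p_pred / (p_pred + sensory_var)  # gain: weight on the new observation
        p = (1.0 - k) * p_pred               # update: uncertainty shrinks
    return k

gain_unpredictable = steady_state_gain(process_var=1.0, sensory_var=1.0)
gain_predictable = steady_state_gain(process_var=0.1, sensory_var=1.0)
example_error = rmse([1.0, 2.0, 3.0], [1.0, 2.0, 5.0])
print(f"K = {gain_unpredictable:.3f} (unpredictable), K = {gain_predictable:.3f} (predictable)")
```

A lower gain for the predictable target corresponds to relying more on the internal prediction and less on real-time input, which is the pattern the Kalman analysis was used to detect.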

For more detail, please see: https://osf.io/42mp8/

The manuscript is under review. Please wait for updates.

Stereo-motion

This is my dissertation. Please wait for updates.

Stereo-motion (or stereomotion) refers to the perception of depth and three-dimensional structure from the combination of binocular disparity (stereo cues) and motion cues. Our visual system integrates these cues to accurately interpret the depth and movement of objects in space.

This project investigates how humans use information from stereomotion channels to perceive 3D space and to guide action.

To prepare for it, I reviewed the literature on time-to-contact (TTC) and the tau model in the context of depth perception and goal-directed actions. Specifically, I reviewed the framework inspired by ecological theory that explicitly integrates stereo vision and motion (particularly optic flow) for recovering three-dimensional (3D) structure and guiding action. To explain how stereo vision and optic flow work, I conducted simulations demonstrating how the optical variable tau and tau-related parameters can facilitate depth perception based on two-dimensional (2D) optic images and effectively guide actions such as braking, reaching and catching. The simulation code and accompanying illustrations are available at: https://osf.io/7rvnp/. The preprint is available and the manuscript is under review. You can also play with the PR control model described in the manuscript at: https://www.desmos.com/calculator/qhcanxz7sd.
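For readers unfamiliar with tau, here is a minimal sketch of the basic idea. It is separate from the simulation code linked above, and the function names and numbers are hypothetical. Tau is the optical angle subtended by an approaching object divided by that angle's rate of expansion; for a constant approach speed and small angles it approximates time-to-contact without requiring knowledge of object size, distance, or speed.

```python
import math

def optical_angle(size_m, distance_m):
    """Visual angle (radians) subtended by an object of a given size and distance."""
    return 2 * math.atan(size_m / (2 * distance_m))

def tau(size_m, distance_m, speed_mps, dt=1e-3):
    """Tau = angle / rate of change of angle, with the rate taken numerically.

    The central difference samples the angle dt before and dt after the
    current moment, assuming a constant approach speed.
    """
    theta_before = optical_angle(size_m, distance_m + speed_mps * dt)
    theta_after = optical_angle(size_m, distance_m - speed_mps * dt)
    theta_rate = (theta_after - theta_before) / (2 * dt)
    return optical_angle(size_m, distance_m) / theta_rate

# Hypothetical case: a 0.2 m object, 10 m away, approaching at 5 m/s.
tau_estimate = tau(0.2, 10.0, 5.0)
true_ttc = 10.0 / 5.0
print(f"tau = {tau_estimate:.3f} s, true time-to-contact = {true_ttc:.3f} s")
```

The observer only needs the optical angle and its rate of change, both available in the 2D image, to recover an estimate of time-to-contact.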

Part of these results is presented at the 2025 Annual Meeting of the Psychonomic Society. The poster is available for viewing.

Relevant content

Publications list

Related concepts

3. Online disparity matching

4. Prismatic distortion

5. Structure from motion (SFM)

6. Tau-related movement control

Academic Activities

Conference

- 2025 Psychonomic Society annual meeting (Presenter)

- 2025 MathPsych (Attendee)

- 2024 Vision Science Society annual meeting (Presenter)

- 2022 Vision Science Society annual meeting (Attendee)

- 2019 Vision Science Society annual meeting (Presenter)

- 2019 OPAM annual meeting (Presenter)

Talk

🧪 The Mesolab Talk

Topic: How Does Stereoblindness Modulate Reaching-to-Grasp?

Host: Prof. Peter Todd

🧠 Cognitive Lunch Talk

Topic: Cue Utilization in Mapping Actual and Perceived 3D Slant: Evidence Supporting a Bayesian Framework for Perceptual Biases

Host: Prof. Tim Pleskac

Other

- Poster Presentation: Investigation of the Use of Optical Texture Size for Monocular Guidance of Reaching-to-Grasp. Departmental Research Poster Session, Department of Psychological and Brain Sciences, Indiana University, Fall 2022.

- 2025 summer study group: My friend Hoyoung Doh has organized a study group for summer 2025 focused on connectionism and neural networks. We’ll be reading selected chapters from the Parallel Distributed Processing (PDP) volumes and working on hands-on coding projects that explore neural network models used in cognitive science research throughout this summer. If you’re interested, we’d love to have you join us! For more details, please visit: https://github.com/hydoh/connectionism-2025

- I finished a review on time-to-contact (TTC) and the tau model in the context of depth perception and goal-directed actions. Specifically, I reviewed the framework inspired by ecological theory that explicitly integrates a fourth dimension, time, as a critical element for recovering three-dimensional (3D) structure and guiding action. Time-dimensioned optical variables enable the direct and prospective control of movement, thereby reducing reliance on internal spatial representations and feedforward models. To support this framework, I conducted simulations demonstrating how the optical variable tau and tau-related parameters can facilitate depth perception based on two-dimensional (2D) optic images and effectively guide actions such as braking, reaching and catching. The simulation code and accompanying illustrations are available at: https://osf.io/7rvnp/. The preprint is available. You can also play with the PR control model described in the manuscript at: https://www.desmos.com/calculator/qhcanxz7sd